We are slowly rolling it out to all users. Please contact us at [founders@bolna.dev](mailto:founders@bolna.dev) for activation.

**Please add an apostrophe (`'`) before the plus sign.**

## Extract call details in structured JSON format

By defining any relevant information you wish to extract from the conversation, you can use `Extraction prompt`. After every call, you'll get this data in the [Execution](/api-reference/executions/get_execution) payload under the `extracted_data` key.

### Example

```text extraction prompt
user_name : Yield the name of the user.
payment_mode : If user is paying by cash, yield cash. If they are paying by card yield card. Else yield NA
payment_date: yield payment date by the user in YYYY-MM-DD format
```

```json response
...
...
"extracted_data": {
  "user_name": "Bruce",
  "payment_mode": "paypal",
  "payment_date": "2024-12-30"
},
...
...
```

# Bolna AI Updates for April, 2025

Source: https://docs.bolna.ai/changelog/april-2025

Explore the latest features, improvements, and API updates introduced in April 2025 for Bolna Voice AI agents.

* Users can now opt for auto recharge.

## Improvements

* Latency improvements across the AI voice call stack.

* Execution pages now support filters and column selections.

When a user interrupts the AI agent mid-conversation, rather than logging the full transcript generated by Large Language Models (LLMs), Bolna intelligently computes the actual transcript by filtering out incomplete or overridden responses. This enhances clarity, ensuring that only the final, meaningful exchange is stored, processed and used for the conversations.

## How It Works

Bolna AI’s interruption handling system functions through a three-step process:

* **Detection of Interruptions**: The system continuously monitors speech input to detect when the user starts speaking while the Voice agent is still speaking.

* **Contextual Computation**: Whenever an interruption is detected, Bolna AI determines whether the user’s input should override the Voice agent's response.

* **Final Transcript Adjustment**: Bolna then reconstructs the conversation transcript to exclude the part of the agent's response that came after the interruption, ensuring that only the final, meaningful parts of the dialogue are retained and used for further processing.

## Example

| Without precise transcript generation | Using precise transcript generation |
| ------------------------------------- | ----------------------------------- |
| **Assistant:** "Hello, Thank you for calling Wayne Enterprises. How can we help you today?" | **Assistant:** "Hello, Thank you for calling Wayne Enterprises. How can we help you today?" |
| **User:** "hello" | **User:** "hello" |
| **Assistant:** "Hello! How can I assist you today?" | **Assistant:** "Hello! How can I ~~assist you today?~~" |
| **User:** "yeah where are you calling from" | **User:** "yeah where are you calling from" |
| **Assistant:** "I'm here to support you regarding your recent order from Wayne Enterprises. How can I assist you?" | **Assistant:** "I'm here to support you regarding your recent order ~~from Wayne Enterprises. How can I assist you?~~" |
| **User:** "yeah i'm facing an issue with the item i purchased" | **User:** "yeah i'm facing an issue with the item i purchased" |
| ... | ... |
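The transcript adjustment can be illustrated with a minimal sketch. It assumes each assistant turn records how many words were actually spoken before the user interrupted; the `Turn` type, its `spoken_words` field, and `precise_transcript` are hypothetical names used for illustration, not Bolna's implementation.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Turn:
    role: str                           # "assistant" or "user"
    text: str                           # full text generated for this turn
    spoken_words: Optional[int] = None  # assumed field: words spoken before an interruption


def precise_transcript(turns: List[Turn]) -> List[Turn]:
    """Keep only the part of each assistant turn spoken before an interruption."""
    result = []
    for turn in turns:
        if turn.role == "assistant" and turn.spoken_words is not None:
            # Drop the words the user never heard.
            kept = " ".join(turn.text.split()[: turn.spoken_words])
            result.append(Turn(turn.role, kept))
        else:
            result.append(Turn(turn.role, turn.text))
    return result


turns = [
    Turn("assistant", "Hello! How can I assist you today?", spoken_words=4),
    Turn("user", "yeah where are you calling from"),
]
for turn in precise_transcript(turns):
    print(f"{turn.role}: {turn.text}")
```

Here the first assistant turn is cut to "Hello! How can I", matching the struck-through portion in the table's right-hand column.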

This data is seamlessly injected into the Voice AI agent's prompt so every interaction feels personalized, targeted and on-point.

## 1. Internal API Integration (Real-Time Lookup)

Perfect for teams with existing databases.

* Provide an API endpoint that accepts the caller’s phone number.

* We automatically send the following information to this API, which your application can consume and use:

  1. the incoming caller's `contact_number`
  2. the `agent_id` to identify the agent
  3. the `execution_id` to identify the unique call

For example: `https://api.your-domain.com/api/customers?contact_number=+19876543210&agent_id=06f64cb2-31cd-49eb-8f81-5be803e12163&execution_id=c4be1d0b-c6bd-489e-9d38-9c15b80ff87c`

* We’ll call this API when a call comes in.

* The returned data (JSON) is automatically merged into the AI prompt just before the call.
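As a rough sketch of what the receiving side might do with such a request, the snippet below parses the three query parameters and returns a JSON body. The `CUSTOMERS` store and the function names are hypothetical, and the returned fields are only an assumption about what you might want in the prompt. One detail worth handling: a literal `+` in a query string is decoded as a space by standard parsers, so the sketch restores it.

```python
import json
from urllib.parse import parse_qs, urlsplit

# Hypothetical in-memory store; a real service would query its own database.
CUSTOMERS = {
    "+19876543210": {"first_name": "Bruce", "last_name": "Wayne"},
}


def normalize_number(raw: str) -> str:
    """Restore a leading '+' that query-string decoding turned into a space."""
    number = raw.strip()
    return number if number.startswith("+") else "+" + number


def parse_lookup_request(url: str) -> dict:
    """Extract the three parameters sent with the lookup request."""
    params = parse_qs(urlsplit(url).query)
    return {
        "contact_number": normalize_number(params["contact_number"][0]),
        "agent_id": params["agent_id"][0],
        "execution_id": params["execution_id"][0],
    }


def lookup_customer(url: str) -> str:
    """Return the JSON body a lookup endpoint might send back."""
    request = parse_lookup_request(url)
    return json.dumps(CUSTOMERS.get(request["contact_number"], {}))


print(lookup_customer(
    "https://api.your-domain.com/api/customers"
    "?contact_number=+19876543210"
    "&agent_id=06f64cb2-31cd-49eb-8f81-5be803e12163"
    "&execution_id=c4be1d0b-c6bd-489e-9d38-9c15b80ff87c"
))
```

Returning an empty object for unknown callers is a design choice in this sketch; your endpoint may prefer default values instead.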

```
contact_number,first_name,last_name
+11231237890,Bruce,Wayne
+91012345678,Bruce,Lee
+00021000000,Satoshi,Nakamoto
+44999999007,James,Bond
```

## 3. Google Sheets Integration

The best of both worlds: real-time sync with spreadsheet simplicity.

* Link a **publicly accessible** Google Sheet with user data and their details.

* Bolna agents auto-sync and look up the incoming number to pull the latest data associated with that phone number.

* You can keep updating the data in your Google Sheet, with no re-uploads needed. Bolna agents automatically pick up the latest available data.

| contact\_number | first\_name | last\_name |
| --------------- | ----------- | ---------- |
| +11231237890 | Bruce | Wayne |
| +91012345678 | Bruce | Lee |
| +00021000000 | Satoshi | Nakamoto |
| +44999999007 | James | Bond |
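The lookup by incoming number can be pictured with a small sketch. It assumes the sheet is available as CSV text with a `contact_number` column, as in the sample above; `build_index` and `prompt_variables` are hypothetical helpers, not part of Bolna.

```python
import csv
import io

# Sample rows copied from the table above.
SHEET_CSV = """contact_number,first_name,last_name
+11231237890,Bruce,Wayne
+91012345678,Bruce,Lee
+00021000000,Satoshi,Nakamoto
+44999999007,James,Bond
"""


def build_index(csv_text: str) -> dict:
    """Index rows by contact_number for constant-time lookups."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row["contact_number"]: row for row in reader}


def prompt_variables(index: dict, incoming_number: str) -> dict:
    """Return the caller's remaining columns, or an empty dict if unknown."""
    row = index.get(incoming_number, {})
    return {key: value for key, value in row.items() if key != "contact_number"}


index = build_index(SHEET_CSV)
print(prompt_variables(index, "+44999999007"))
```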

# Multilingual Language Support in Bolna Voice AI

Source: https://docs.bolna.ai/customizations/multilingual-languages-support

Discover how Bolna Voice AI supports multiple languages. Enable global interactions with multilingual capabilities tailored to your audience.

## List of languages supported on Bolna

| Language                 | Language code | BCP Format |
| ------------------------ | ------------- | ---------- |
| Arabic                   | ar            | ar-AE      |
| Bengali                  | bn            | bn-IN      |
| Bulgarian                | bg            | bg-BG      |
| Catalan                  | ca            | ca-ES      |
| Czech                    | cs            | cs-CZ      |
| Danish                   | da            | da-DK      |
| Dutch                    | nl            | nl-NL      |
| English (Australia)      | en-AU         | en-AU      |
| English + French         | multi-fr      | multi-fr   |
| English + German         | multi-de      | multi-de   |
| English + Hindi          | multi-hi      | multi-hi   |
| English (India)          | en-IN         | en-IN      |
| English (New Zealand)    | en-NZ         | en-NZ      |
| English + Spanish        | multi-es      | multi-es   |
| English (United Kingdom) | en-GB         | en-GB      |
| English (United States)  | en            | en-US      |
| Estonian                 | et            | et-EE      |
| Finnish                  | fi            | fi-FI      |
| Flemish                  | nl-BE         | nl-BE      |
| French                   | fr            | fr-FR      |
| French (Canada)          | fr            | fr-CA      |
| German                   | de            | de-DE      |
| German (Switzerland)     | de-CH         | de-CH      |
| Greek                    | el            | el-GR      |
| Gujarati                 | gu            | gu-IN      |
| Hindi                    | hi            | hi-IN      |
| Hungarian                | hu            | hu-HU      |
| Indonesian               | id            | id-ID      |
| Italian                  | it            | it-IT      |
| Japanese                 | ja            | ja-JP      |
| Kannada                  | kn            | kn-IN      |
| Khmer (Cambodia)         | km            | km-KH      |
| Korean                   | ko            | ko-KR      |
| Latvian                  | lv            | lv-LV      |
| Lithuanian               | lt            | lt-LT      |
| Malay                    | ms            | ms-MY      |
| Malayalam                | ml            | ml-IN      |
| Marathi                  | mr            | mr-IN      |
| Norwegian                | no            | nb-NO      |
| Polish                   | pl            | pl-PL      |
| Portuguese (Brazil)      | pt-BR         | pt-BR      |
| Portuguese (Portugal)    | pt            | pt-PT      |
| Punjabi (India)          | pa            | pa-IN      |
| Romanian                 | ro            | ro-RO      |
| Russian                  | ru            | ru-RU      |
| Slovak                   | sk            | sk-SK      |
| Spanish                  | es            | es-ES      |
| Swedish                  | sv            | sv-SE      |
| Tamil                    | ta            | ta-IN      |
| Telugu                   | te            | te-IN      |
| Thai                     | th            | th-TH      |
| Turkish                  | tr            | tr-TR      |
| Ukrainian                | uk            | uk-UA      |
| Vietnamese               | vi            | vi-VN      |

## Prompting Guide

We've put together a document outlining best practices and recommendations, with example prompts along with common mistakes to avoid. You can go through the [guide for writing prompts in non-English languages](/guides/writing-prompts-in-non-english-languages).

# Using Custom LLMs with Bolna Voice AI

Source: https://docs.bolna.ai/customizations/using-custom-llm

Integrate custom large language models (LLMs) into Bolna Voice AI to enhance agent capabilities and tailor responses to your unique requirements.

2. From the dropdown click on `Add your own LLM`.

3. A dialog box will be displayed. Fill in the following details:

* `LLM URL`: the endpoint of your custom LLM

* `LLM Name`: a name for your custom LLM

Click on `Add Custom LLM` to connect this LLM to Bolna.

4. **Refresh the page**

5. In the LLM settings tab, choose `Custom` in the first dropdown to select LLM Providers

6. In the LLM settings tab, you'll now see your custom LLM model name. Select it and save the agent.

**Using the above steps will make sure the agent uses your Custom LLM URL**.

## Demo video

Here's a working video highlighting the flow:

# Terminate Bolna Voice AI calls

Source: https://docs.bolna.ai/disconnect-calls

Optimize call lengths with Bolna Voice AI by setting duration limits. Automatically terminate calls exceeding limits for better resource management.

## Terminate calls

### Terminating live calls

| Call Type      | Compatibility and support |
| -------------- | ------------------------- |
| Outbound calls | ✓                         |
| Inbound calls  | ✓                         |

Users can configure a time limit for calls, which defines the maximum duration (in seconds) for a call. Once the set time limit is reached, the system automatically terminates the call, ensuring calls are restricted to a predefined duration.

This helps users protect their calls and bills in cases where calls run for unexpectedly long durations.

# Bolna AI On-Prem for Enterprise

Source: https://docs.bolna.ai/enterprise/on-premise-deployments

Discover Bolna Enterprise solutions for large-scale businesses, offering scalable Voice AI agents, advanced integrations, and custom seamless solutions.

**Bolna AI On-Prem** empowers your organization to deploy our best-in-class voice AI infrastructure. It is fully containerized and runs entirely within your cloud or data center. Designed for high-security, high-performance workloads, it's ideal for industries with stringent data requirements.

## Adding Guardrails

> ✅ कैसे हो?

> ❌ Como estas?
>
> ✅ ¿Cómo estás?

## FAQs

You can add a `silence time` threshold, which lets you set a configurable threshold (in seconds) for detecting user inactivity during a call. If no audio is detected from the user for the specified duration, the call will automatically disconnect. This helps streamline conversations and prevent unnecessary call durations.

### 2. Using prompts to hang up calls

You can choose to add a custom prompt which determines whether to disconnect the call or not.

# Importing your voices to use with Bolna Voice AI

Source: https://docs.bolna.ai/import-voices

Easily import voices from multiple providers like ElevenLabs and Cartesia, including custom voices, into Bolna for seamless voice agent personalization.

## Importing voices using dashboard

1. Navigate to [Voice lab](https://platform.bolna.ai/voices) in the dashboard and click `Import Voices`.

2. Select your Voice Provider from the list.

3. Provide the `Voice ID` which you want to import onto Bolna:

4. Enable import from your own connected account if you wish to import any custom voice or your own account voice

5. Click on `Import`. Your voice will get imported and enabled for your account within seconds!

# Home

Source: https://docs.bolna.ai/index

Create and deploy Conversational Voice AI Agents

Bolna Voice AI Documentation

Learn how to create conversational voice agents with Bolna AI to **qualify leads**, **boost sales**, **automate customer support**, and **streamline recruitment and hiring**.

There might be some lag (\~2-3 minutes) for receiving `completed` event since processing of call data and the recordings might take some time) |

## List of unanswered call status

| Event name    | Description                                                  |
| ------------- | ------------------------------------------------------------ |
| `balance-low` | The call cannot be initiated since your Bolna balance is low |
| `busy`        | The callee was busy                                          |
| `no-answer`   | The phone was ringing but the callee did not answer the call |

## List of unsuccessful call status

| Event name | Description                                                                        |
| ---------- | ---------------------------------------------------------------------------------- |
| `canceled` | The call was canceled                                                              |
| `failed`   | The call failed                                                                    |
| `stopped`  | The call was stopped by the user or due to no response from the telephony provider |
| `error`    | An error occurred while placing the call                                           |

The payloads for all the above events will follow the same structure as that of [Agent Execution](api-reference/executions/get_execution) response.
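A consumer of these events might group them before deciding what to do next (for example, retrying unanswered calls). The sketch below is only illustrative: it assumes the payload exposes the event name under a `status` key, which should be checked against the Agent Execution response, and `classify_call_status` is a hypothetical helper.

```python
# Event names taken from the two tables above.
UNANSWERED_STATUSES = {"balance-low", "busy", "no-answer"}
UNSUCCESSFUL_STATUSES = {"canceled", "failed", "stopped", "error"}


def classify_call_status(payload: dict) -> str:
    """Group a call event into 'unanswered', 'unsuccessful', or 'other'."""
    status = payload.get("status")  # assumed field name, verify against your payloads
    if status in UNANSWERED_STATUSES:
        return "unanswered"
    if status in UNSUCCESSFUL_STATUSES:
        return "unsuccessful"
    return "other"


for name in ("busy", "failed", "completed"):
    print(name, "->", classify_call_status({"status": name}))
```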

### Method 2. Connect your Telephony account and use your own phone numbers.

2. See your **Account limits**

# Platform Concepts

Source: https://docs.bolna.ai/platform-concepts

An overview of the various components that make up Bolna Voice AI agents, along with the key tasks these agents are designed to perform.

### Agent:

Bolna helps you create AI Agents which can be instructed to do tasks beginning with:

* An Input medium
  * For voice based conversations the `agent` input could be a microphone or a phone call
  * For text based conversations the `agent` could take inputs via keyboard
  * For visual based conversations the `agent` could take inputs in the form of images (Coming soon)

* An ASR
  * ASR converts the input to an LLM compatible format so it can pass it to the chosen LLM

* An LLM
  * LLM takes the input from ASR, generates the appropriate response, and passes it to the TTS or Image Generation model depending on the type of conversation the Agent is being built for

* A TTS / Image Generation Model
  * Takes the LLM response and generates a compatible output to pass on to the output component

* An Output component
  * Similar to the input component, this will pass the compatible text/voice/image to the output medium
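The component chain can be pictured as plain function composition. This is a conceptual sketch only: `build_agent` and the three stub components are hypothetical stand-ins, and real ASR, LLM, and TTS components stream data rather than handle one message at a time.

```python
from typing import Callable


def build_agent(
    asr: Callable[[bytes], str],
    llm: Callable[[str], str],
    tts: Callable[[str], bytes],
) -> Callable[[bytes], bytes]:
    """Chain input -> ASR -> LLM -> TTS -> output into one callable."""

    def handle(audio_in: bytes) -> bytes:
        text_in = asr(audio_in)  # convert input to an LLM compatible format
        reply = llm(text_in)     # generate the response
        return tts(reply)        # produce output for the output component

    return handle


# Stub components so the sketch runs without any external service.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(text: str) -> str:
    return f"You said: {text}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


agent = build_agent(fake_asr, fake_llm, fake_tts)
print(agent(b"hello"))
```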

### Tasks

Bolna provides the functionality to instruct your `agent` to execute tasks once the conversation has ended.

* Summarization task

* Extraction task

* Function tools

# Bolna Playground Overview

Source: https://docs.bolna.ai/playground

Learn to create, modify, and test Bolna Voice AI agents using the various capabilities like Agent Setup, Executions, Batches, Voice Lab, Phone Numbers and more.

## Bolna Playground Overview

### 1. Agent Setup

This is your home page! [Create, modify or test your agents](https://platform.bolna.ai/)

### 2. Agent Executions

[See all conversations](https://platform.bolna.ai/agent-executions) carried out using your agents

### 3. Batches

Upload, Schedule and Manage outbound [calling campaigns](https://platform.bolna.ai/batches)

### 4. Voice Lab

[Experience and choose](https://platform.bolna.ai/voices) between hundreds of voices from multiple text-to-speech providers

### 5. Developers

[Create and manage API keys](https://platform.bolna.ai/developers) for accessing and building using Bolna hosted APIs

### 6. Providers

[Connect your own providers](https://platform.bolna.ai/providers) like Twilio, Plivo, OpenAI, ElevenLabs, Deepgram, etc.

### 7. Available Credits

Number of credits remaining (1 credit \~ 1c). Credits are consumed per conversation with your agent.

### 8. Add more Credits

Replenish your credits (Contact us for enterprise discounts at scale)

### 9. Read API Docs to carry out all actions (plus more) using APIs

Link to the API reference

### 10. Join our Discord Community and give us a star on GitHub!

1. Choose your agent and batch (if required) of conversations you want to analyse

2. Learn how to call all details using simple APIs. You can use this to link your analytics with your database. Contact us if you need our services in connecting these

3. Columns of executions table

   * `execution_id` is a unique ID given to each conversation
   * Conversation type is either `Websocket chat` or `telephony`
   * `Duration` is the duration of the conversation from start to end
   * `Cost in credits` is the total credits spent for that conversation

4. Clicking on conversation details opens a tab where you can see the following for each conversation

   * `Recording` of the call
   * `Transcript` of the call
   * `Summary` / `Extraction` of the call (if set up in Tasks)

# Creating your Bolna Voice AI agent

Source: https://docs.bolna.ai/playground/agent-setup

Step-by-step guide to creating, importing, and managing your Bolna Voice AI agents within the Playground.

1. **Create an agent** - Don’t forget to click on ‘Create Agent’ on the right to complete creation

2. **Import agent** - Put in an agent link and import a pre-built agent. For example, `https://bolna.ai/a/e3602854-ed7b-49da-a329-99f53710a0d7`

3. List of all agents that you have created.

4. **Share agent** - Get a link that you can share with the world (They can import your agent)

5. Get agent link that can be pasted in your [Twilio account to set up an inbound agent](/receiving-incoming-calls)

6. Start outbound calls by entering numbers (including country code) in the `recipient` textbox.

7. Schedule your calls or batches from the [Batches](https://platform.bolna.ai/batches) tab

8. See all Executions in the [Agent Executions](https://platform.bolna.ai/agent-executions) tab

9. `Save Agent` - Your changes will only be reflected in conversations after you click on save agent

10. Test your agent’s intelligence and responses by chatting with it on this screen using our chat option

# Agents Tab

Source: https://docs.bolna.ai/playground/agent-tab

Central hub for creating, modifying, and testing your Bolna Voice AI agents, including prompt customization and variable management.

1. **Text-to-speech Voice** - Shortcut to select voice (Can also be done from the Voices tab)

2. **LLM** - Shortcut to select LLM (can also be done from the LLM tab)

3. Scroll between all tabs

4. **Agent Welcome Message** - This is the first message that the agent will speak as soon as the call is picked up. This message can also be interrupted by the user. (Hot tip : Unless you have a clear announcement / disclaimer to start with, keep this message short - `Hello!`)

5. **Agent Prompt** - This is the text box in which you will write the entire prompt that your agent will follow.

   Make sure your prompt is clear and to the point. (Hot tip: if you have a transcript or a rough prompt in mind, open our Custom GPT, add your transcript or thoughts there, and you will get a refined prompt that you can use: [https://chatgpt.com/g/g-7hDrhJaDl-bolna-bot-builder](https://chatgpt.com/g/g-7hDrhJaDl-bolna-bot-builder))

6. **Variables** - Whenever you write text as `{variable}`, it becomes a custom variable that you can assign. Whatever you write in the variable text box is what the agent uses when conversing. For example, in the prompt you can write `You are speaking to {name}` and, in the text box, write Rahul to tell the agent who they are speaking with.
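Conceptually, variable substitution works like simple template filling. The sketch below mimics it in Python; `render_prompt` is a hypothetical helper, and leaving unknown placeholders untouched is an assumption of this sketch rather than documented Bolna behaviour.

```python
import re


def render_prompt(template: str, variables: dict) -> str:
    """Replace each {name} placeholder with its assigned value."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        # Leave the placeholder as-is when no value was assigned.
        return str(variables.get(name, match.group(0)))

    return re.sub(r"\{(\w+)\}", substitute, template)


print(render_prompt("You are speaking to {name}", {"name": "Rahul"}))
```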

# Call Tab

Source: https://docs.bolna.ai/playground/call-tab

Manage telephony settings, including providers, call hangup prompts, and termination time limits to be used for phone calls with Bolna Voice AI agents.

1. Our telephony provider partnerships are with [Twilio](/twilio) and [Plivo](/plivo). They support both inbound and outbound calling

2. **Call hangup** - Use a prompt or silence timer to instruct the agent when to end the call. Make sure your prompt is very clear and to the point to avoid chances of the agent ending the call at the wrong time

3. **Call termination** - Choose a max time limit for each call, beyond which the call will automatically get cut
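The two timer-based rules (silence timer and max time limit) can be summarised in a short sketch. The function and parameter names are hypothetical, and checking the time limit before the silence timer is an arbitrary choice made here; prompt-based hangup is not modelled.

```python
from typing import Optional


def hangup_reason(
    elapsed_seconds: float,
    silence_seconds: float,
    max_call_seconds: float,
    silence_threshold_seconds: float,
) -> Optional[str]:
    """Return why a call should end now, or None if it should continue."""
    if elapsed_seconds >= max_call_seconds:
        return "time_limit"  # call termination: max duration reached
    if silence_seconds >= silence_threshold_seconds:
        return "silence"     # call hangup: user has been silent too long
    return None


# A 5-minute limit with a 10-second silence timer (illustrative values).
print(hangup_reason(120, 3, max_call_seconds=300, silence_threshold_seconds=10))
print(hangup_reason(120, 12, max_call_seconds=300, silence_threshold_seconds=10))
print(hangup_reason(300, 0, max_call_seconds=300, silence_threshold_seconds=10))
```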

# Functions Tab

Source: https://docs.bolna.ai/playground/functions-tab

Integrate function calling capabilities, such as appointment scheduling and call transfers, into your Bolna Voice AI agents.

1. Choose [desired functions](/function-calling), customise and add

2. Connect using [cal.com](https://cal.com) API (Calendly / Google Calendar coming soon) to check availability of slots for selected event type

3. Transfer the call to one (or multiple) human phone numbers when the conditions you define are met. Make sure your prompts are clear to avoid the agent transferring calls when it is not necessary

4. Book appointments in free slots using [cal.com](https://cal.com) API (Calendly / Google Calendar coming soon)

# LLM Tab

Source: https://docs.bolna.ai/playground/llm-tab

Configure Large Language Model (LLM) settings for your agents, including provider selection, token limits, and response creativity.

1. **Choose your LLM Provider** - (`OpenAI`, `DeepInfra`, `Groq`) and respective model (`gpt-4o`, `Meta Llama 3 70B instruct`, `Gemma - 7b`, etc.)

2. **Tokens** - Increasing this number enables longer responses to be queued before sending to the synthesiser but slightly increases latency

3. **Temperature** - Increasing temperature enables heightened creativity, but increases the chance of deviation from the prompt. Keep the temperature low if you want more control over how your AI will converse

4. **Filler words** - Reduce perceived latency by smartly responding `<300ms` after the user stops speaking, but recipients can feel that the AI agent is not letting them complete their sentence

# Tasks Tab

Source: https://docs.bolna.ai/playground/tasks-tab

Add follow-up tasks like conversation summaries, information extraction, and custom webhooks for post-call actions to be performed by Bolna Voice AI agents.

1. Generate a generic summary of the conversation

2. Extract structured information from the conversation.

1. Generate a generic summary of the conversation

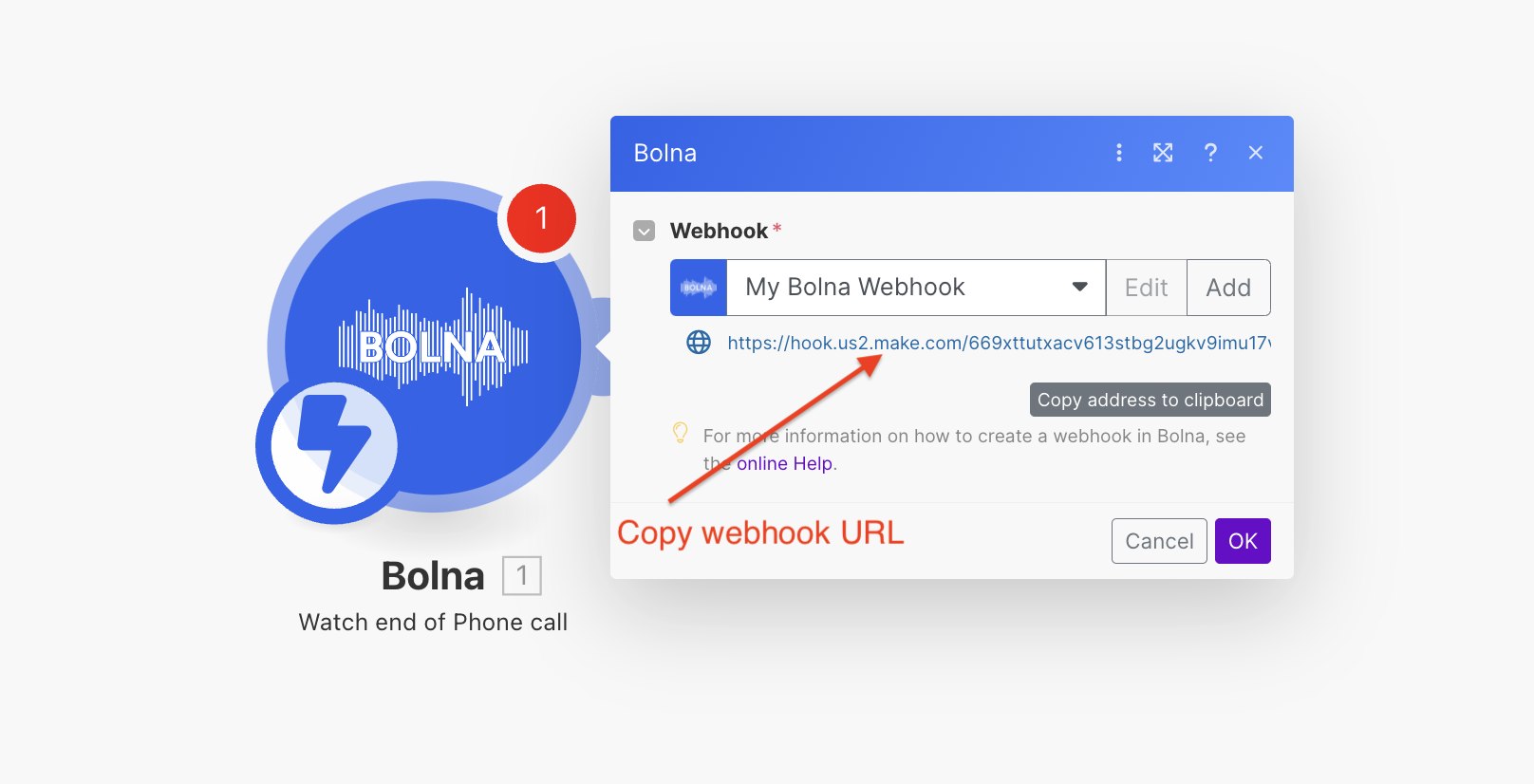

2. Extract structured information from the conversation. Write your prompt in the following template: ``` variable_name (example ; Payment_mode) : Clear actionable on what to yield and when (Yield the type of payment that the user agrees to pay with). Actionable could be open-ended or classified (If user wants to pay by cash, yield cash. Else yield NA) ``` 3. Create your own webhook to ingest or send out information post closure of conversation # Transcriber Tab Source: https://docs.bolna.ai/playground/transcriber-tab Set up Speech-to-Text (STT) configurations, choose transcriber providers, and define language and endpointing settings to be set for Bolna Voice AI agents.

1. Choose your **Transcriber Provider** and **model**

* Deepgram (Default transcriber, most tried and tested)

* Whisper (open-source, cheapest)

2. **Language** - By default the agent can only transcribe English. If you choose any other language, the agent will be able to transcribe sentences spoken in the chosen language as well as English

3. **Endpointing** - Number of milliseconds your agent will wait before generating a response. Lower endpointing reduces latency but could lead to the agent interrupting mid-sentence. If you want quick short responses, keep a low (`100ms`) endpoint. If you are expecting users to speak longer sentences, keep a higher (`500ms`) endpoint.

4. **Linear Delay** - Linear delay accounts for long pauses mid-sentence. If the recipient is expected to speak long sentences, increase the value of linear delay

5. **Interruption settings** - The agent will not treat speech as an interruption until the human speaks this number of words. Ideal to prevent the agent pausing when the human is actively listening by saying `Oh`, `yes` etc.

(If the user says a stopword, such as `stop`, `wait`, etc., the agent will automatically pause regardless of the settings)

6. **Backchanneling** - Switch on only if the user is expected to speak long sentences. The agent will show it is listening by giving soft verbal nudges of acknowledgement.

You can change the time to wait before the agent gives the first filler, as well as the time between subsequent fillers

# Voice Tab

Source: https://docs.bolna.ai/playground/voice-tab

Customize Text-to-Speech (TTS) settings, select voice providers, and adjust buffer sizes for optimal Bolna Voice AI agent performance.

1. Choose your **TTS Provider** and **Voice**

* `ElevenLabs` is the most realistic and costliest voice

* `Deepgram` and `Azure TTS` are the quickest and cheapest providers.

2. Play around with more voices from each provider in Voice Labs before finalising on the voice you want. Pressing the **play button** will enable your selected voice to speak out the `Welcome Message` that you have set

3. **Increasing buffer size** enables agent to speak long responses fluently, but increases latency. Buffer sizes of \~250 are ideal for most conversations

4. **Ambient noise** removes the pin-drop silence between a conversation and makes it more realistic. However, be careful not to let the background noise be a distraction

5. The agent will **check if the user is still active in the call** after a fixed time that you can decide. You can customise the message the agent will use to ask

# Enhance Call Capabilities with Bolna's Plivo Integration

Source: https://docs.bolna.ai/plivo

Integrate Plivo with Bolna to manage outbound and inbound calls. Access setup guides for seamless Voice AI agent communication using your Plivo numbers.

2. Fill in the required details.

3. Save details by clicking on the **connect button**.

4. You'll see that your Plivo account was successfully connected. All your calls will now go via your own Plivo account and phone numbers.

# Initiate Outbound Calls via Plivo with Bolna Voice AI

Source: https://docs.bolna.ai/plivo-outbound-calls

Configure Bolna Voice AI agents to make outbound calls through Plivo. Learn to set up calls using the dashboard and APIs for effective outreach.

## Making outbound calls from dashboard

1. Login to the dashboard at [https://platform.bolna.ai](https://platform.bolna.ai) using your account credentials

2. Choose `Plivo` as the Call provider for your agent and save it

3. Start placing phone calls by providing the recipient phone numbers. Bolna will place the calls to the provided phone numbers.

Depends on the **duration of calls** (rounded to seconds).

Depends on the **total LLM tokens generated**.

Depends on the **characters**.

Depends on the **duration of calls** (rounded to minutes).

You can connect your own [Providers](/providers) like [Telephony](/supported-telephony-providers), [Transcriber](/providers/transcriber/deepgram), [LLMs](/providers/llm-model/openai), [Text-to-Speech](/providers/voice/aws-polly) and lower the costs. In that case Bolna will not charge for that component.

We connect all your `Provider` accounts securely using [Infisical](https://infisical.com/).

## Method 2. Connect your Telephony account and use your own phone numbers.

**outbound** and **inbound** calls

* [Twilio](/twilio)

* [Plivo](/plivo)

* [Talk to us to include more](https://calendly.com/bolna/30min)

# Prompting & best practices for Bolna Voice AI agents

Source: https://docs.bolna.ai/tips-and-tricks

Explore practical tips and tricks to enhance the performance of Bolna Voice AI agents. Master best practices for effective implementation.

### Agent Overview

* Choosing between a Free flowing and an IVR agent

  * **Benefits of Free Flowing agents**: A free flowing agent requires only a plain English prompt to create. It enables truly natural conversations and allows your agent to be creative when responding

  * **Harms of Free Flowing agents**: The prompt and settings often require fine-tuning to ensure you are getting the desired responses. Free flowing agents are also costlier

  * **Benefits of IVR agents**: You have complete control over the exact sentences your IVR agent will say. IVR agents are also cheaper and have little to no risk of deviation / hallucination

  * **Harms of IVR agents**: Conversations are limited to what you have defined in the IVR tree. The agent will try to map any response a user makes to the options that you have given it. This increases the chances of the call seeming artificial. It also takes time to build an IVR tree

* Choosing a Task

  * The quickest way of creating an agent is choosing a task and making small changes to the pre-defined template that we have set for you (Note: these agents are built to run on default settings. Changes in settings will require changing prompts)

  * If you want to start from scratch, choose Others as your task

* Choosing invocation

  * Choose telephone only if you want your agent to make telephone calls (Note: Telephone calling is expensive and you will burn through your credits rapidly. We **strongly** suggest you use our playground to thoroughly test your agent before initiating a call)

### Assigning Rules

* Stay concise with your prompts. Use the 'Tips' to quickly build a prompt. Ideally, start with a clear, short prompt and keep adding details.

* Prompt engineering takes time! Be patient if your agent does not follow your prompt the way you want it to

* **Expert Tips**: For smart low-latency conversations, only use the Overview page (leave all other pages blank). Clearly state your required intent, and start the prompt with the line "You will not speak more than 2 sentences"

### Assigning Follow-up tasks

* Summary will give a short summary of all important points discussed in the conversation

* Extraction allows you to specify what classifiers you want to pull from the conversation. Be clear in defining what you want to extract

* For webhook, you will have to give a webhook url (e.g., Zapier). Your extraction prompt should trigger the task set through the webhook.

### Assigning Settings

* Refer to this page for a detailed pricing + latency guide when assigning settings

* Make sure the voice you choose speaks the language that you have chosen

* Only modify advanced settings if you have experience working with LLMs

* **Expert Tips**: For smart low-latency conversations use these settings

  * Model: Dolphin-2.6-mixtral-8x7b

  * Language: en

  * Voice: Danielle (United States - English)

  * Max Tokens: 60

  * Buffer Size: 100

# Booking Calendar Slots via Bolna Voice AI and Cal.com Integration

Source: https://docs.bolna.ai/tool-calling/book-calendar-slots

A comprehensive guide on how to book calendar slots during live calls using Bolna Voice AI integrated with Cal.com.

| Property    | Description |
| ----------- | ----------- |
| Description | Clearly describe the tool's purpose and try to **make it as descriptive as possible for the LLM to execute it successfully**. |
| API key     | Your Cal.com API key ([generate here](https://app.cal.com/settings/developer/api-keys)) |
| Event       | Your Cal.com event |
| Timezone    | **Select the timezone** used in your Cal.com event. This **helps the agent compute times accurately** based on your local setting. |

# Implementing Custom Function Calls in Bolna Voice AI Agents

Source: https://docs.bolna.ai/tool-calling/custom-function-calls

Learn how to design and integrate custom function calls within Bolna Voice AI agents to enhance their capabilities.

You can design your own functions and use them in Bolna. Custom functions follow the [OpenAI specifications](https://platform.openai.com/docs/guides/function-calling). You can paste your valid JSON schema and define your own custom function.

## Steps to write your own custom function

* Make sure the `key` is set as `custom_task`.

* Write a good description for the function. This helps the model intelligently decide when to call the function.

* All parameter properties must be mentioned in the value param as JSON and follow Python format specifiers, as shown below:

| Param       | Type    | Variable        |
| ----------- | ------- | --------------- |
| `user_name` | `str`   | `%(user_name)s` |
| `user_age`  | `int`   | `%(user_age)i`  |
| `cost`      | `float` | `%(cost)f`      |

## More examples of writing custom function calls
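To get a feel for how Python format specifiers behave, here is a small sketch using standard Python `%`-formatting with a mapping. The `param_template` string and the sample values are hypothetical and chosen only for illustration; how Bolna applies the substitution internally is an assumption here, not something the documentation confirms.

```python
import json

# Hypothetical "value" template using Python format specifiers:
# %(name)s for strings, %(name)i for integers, %(name)f for floats.
param_template = (
    '{"user_name": "%(user_name)s", '
    '"user_age": %(user_age)i, '
    '"cost": %(cost)f}'
)

# Sample values, standing in for what the model would collect during a call.
values = {"user_name": "Bruce", "user_age": 32, "cost": 49.5}

# Standard Python %-formatting with a dict fills each named specifier.
rendered = param_template % values

# The rendered string is valid JSON and can be parsed back into a dict.
payload = json.loads(rendered)
print(payload)
```

Note that string parameters need surrounding quotes in the template, while integer and float parameters do not, so that the rendered result stays valid JSON.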

| Property    | Description |
| ----------- | ----------- |
| Description | Add a description for fetching the slots. **Try to make it as descriptive as possible for the LLM to execute this function successfully**. |
| API key     | Your Cal.com API key ([generate here](https://app.cal.com/settings/developer/api-keys)) |
| Event       | Your Cal.com event |
| Timezone    | **Select the timezone** used in your Cal.com event. This **helps the agent compute times accurately** based on your local setting. |

# Function Calling in Bolna Voice AI: Automate Workflows with Custom Functions

Source: https://docs.bolna.ai/tool-calling/introduction

Learn how to use function calling in Bolna Voice AI to automate complex workflows by integrating custom functions with your voice agents.

## Type of tools supported in Bolna AI

Using this, you can transfer on-going calls to another phone number depending on the description (prompt) provided.

| Property                 | Description |
| ------------------------ | ----------- |
| Description              | Description for your transfer call functionality. **Make it as descriptive as possible for the LLM to execute this function successfully**. |
| Transfer to Phone number | The phone number where the agent will transfer the call to |

Please note:

1. If you want to use any extraction details, they will be provided as JSON under `"extracted_data"` as shown below. Please refer to [extracting conversation data](/call-details) for more details on using it.

```json using extracted content
...
...
"extracted_data": {
  "address": "Market street, San francisco",
  "salary_expected": "100k USD"
},
...
```

2. Any dynamic variables you pass when making the call can be retrieved from `"context_details" > "recipient_data"` as shown below:

```json using user details
...
"context_details": {
  "recipient_data": {
    "name": "Harry",
    "email": "harry@hogwarts.com"
  },
  "recipient_phone_number": "+19876543210"
},
...
```
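As a rough illustration of consuming these fields, the Python sketch below reads `extracted_data` and `context_details` from a payload shaped like the snippets above. The trimmed payload and the `read_call_details` helper are hypothetical; a real payload carries more keys than shown here.

```python
import json

# Hypothetical, trimmed payload containing only the keys shown above.
raw_payload = """
{
  "extracted_data": {
    "address": "Market street, San francisco",
    "salary_expected": "100k USD"
  },
  "context_details": {
    "recipient_data": {"name": "Harry", "email": "harry@hogwarts.com"},
    "recipient_phone_number": "+19876543210"
  }
}
"""


def read_call_details(payload: dict) -> dict:
    """Pull a few fields out of the payload, tolerating missing keys."""
    extracted = payload.get("extracted_data") or {}
    context = payload.get("context_details") or {}
    recipient = context.get("recipient_data") or {}
    return {
        "name": recipient.get("name"),
        "phone": context.get("recipient_phone_number"),
        "salary_expected": extracted.get("salary_expected"),
    }


details = read_call_details(json.loads(raw_payload))
print(details)
```

Using `.get(...)` with fallbacks keeps the reader from failing on calls where no extraction prompt or dynamic variables were configured.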

Please note:

1. If you want to use the call summary, it will be provided under `"summary"` as shown below.

```json using call summary
...
...
"summary": "The conversation was between a user named Harry and an agent named Charles from Alexa. Charles initiated the call to assist Harry with inquiries about Apple Service Center. Harry asked for the location of the closest service center, and Charles informed about Apple Union Square, 300 Post Street San Francisco. After receiving the information, Harry indicated he had no further questions, and Charles offered to help in the future before concluding the call.",
...
```

2. Any dynamic variables you pass when making the call can be retrieved from `"context_details" > "recipient_data"` as shown below:

```json using user details
...
"context_details": {
  "recipient_data": {
    "name": "Harry",
    "email": "harry@hogwarts.com"
  },
  "recipient_phone_number": "+19876543210"
},
...
```

Please note:

1. If you want to use any extraction details, they will be provided as JSON under `"extracted_data"` as shown below. Please refer to [extracting conversation data](/call-details) for more details on using it.

```json using extracted content
...
...
"extracted_data": {
  "salary_expected": "120K USD"
},
...
```

2. Any dynamic variables you pass when making the call can be retrieved from `"context_details" > "recipient_data"` as shown below:

```json using user details
...
"context_details": {
  "recipient_data": {
    "name": "Harry",
    "email": "harry@hogwarts.com"
  },
  "recipient_phone_number": "+19876543210"
},
...
```

Use this to make Outgoing Phone Calls to phone numbers

2. Fill in the required details.

3. Save details by clicking on the **connect button**.

4. You'll see that your Twilio account was successfully connected. All your calls will now go via your own Twilio account and phone numbers.

# Make Outbound Calls via Twilio with Bolna Voice AI

Source: https://docs.bolna.ai/twilio-outbound-calls

Set up Bolna Voice AI agents to place outbound calls through Twilio. Learn dashboard configurations and API methods for efficient call management.

## Making outbound calls from dashboard

1. Login to the dashboard at [https://platform.bolna.ai](https://platform.bolna.ai) using your account credentials

2. Choose `Twilio` as the Call provider for your agent and save it

3. Start placing phone calls by providing the recipient phone numbers. Bolna will place the calls to the provided phone numbers.

In **inbound calls**, this is the caller (e.g. customer).

In **outbound calls**, this is your agent's number. | | `to_number` | The phone number that **received** the call.

In **inbound calls**, this is your agent's number.

In **outbound calls**, this is the recipient's number (e.g. customer). |

You may use the above information to pass useful info into your systems or use them in the function calls or prompts.

### Example using default variables

For example, adding the below content in the prompt using the above default variables will automatically fill in their values.

```php
This is your agent Sam. Please have a friendly conversation with the customer.

Please note:
The agent has a unique id which is "{agent_id}".
The call's unique id is "{call_sid}".
The customer's phone number is "{to_number}".
```

The above prompt content computes to and is fed as:

```php
This is your agent Sam. Please have a friendly conversation with the customer.

Please note:
The agent has a unique id which is "4a8135ce-94fc-4a80-9a33-140fe1ed8ff5".
The call's unique id is "PXNEJUFEWUEWHVEWHQFEWJ".
The customer's phone number is "+19876543210".
```

***

## Custom variables and context

Apart from the default variables, you can write your own variables and pass them into the prompt. Any content written between `{}` in the prompt becomes a variable. For example, adding the below content in the prompt will dynamically fill in the values.

### Example using custom variables

```
This is your agent Sam speaking.
May I confirm if your name is {customer_name} and you called us on {last_contacted_on} to enquire about your order item {product_name}.
Use the call's id which is {call_sid} to automatically transfer the call to a human when the user asks.
```

You can now pass these values while placing the call:

```bash
curl --request POST \
  --url https://api.bolna.ai/call \
  --header 'Authorization: Bearer
```

* Click the button `Upload` to upload a new PDF

* Ingesting your knowledgebase document

* Wait a few minutes while we work our magic and process your uploaded document

## Using your uploaded knowledgebases in Agents

* In the agent creation page, navigate to `LLM tab` and select the knowledgebase from the dropdown.